Announcing py2wasm: A Python to Wasm compiler

py2wasm converts your Python programs to WebAssembly, running them at 3x faster speeds

Syrus Akbary

Founder & CEO

April 18, 2024

Since starting Wasmer five years ago, we've been obsessed with empowering more languages to target the web and beyond through WebAssembly.

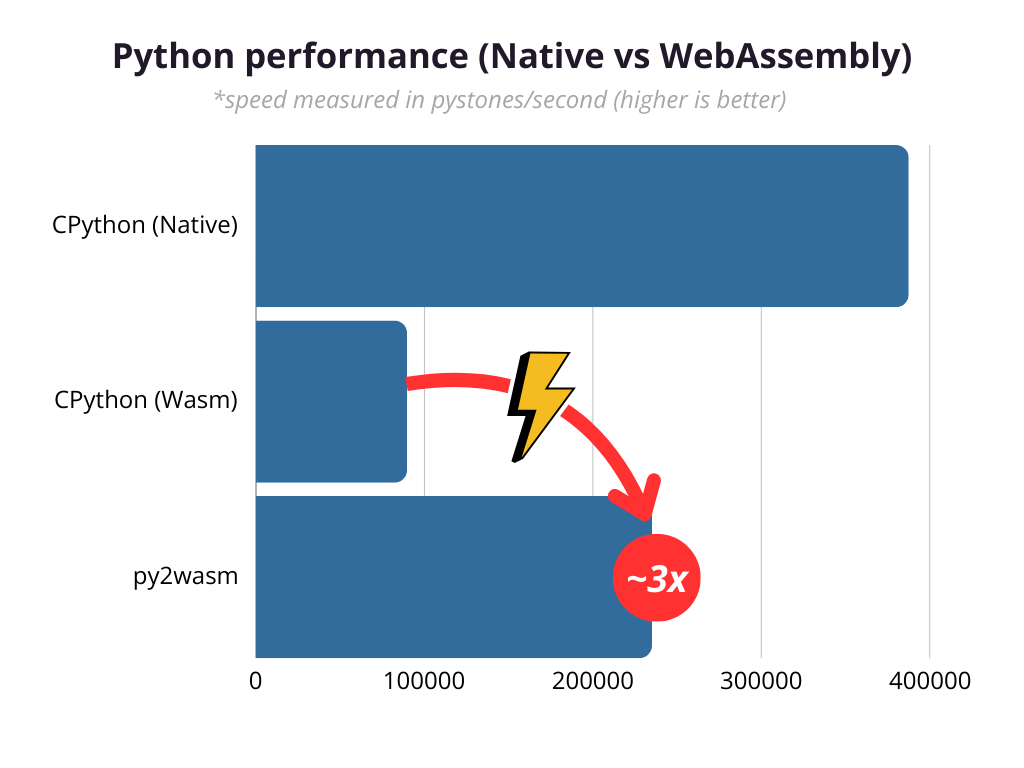

One of the most popular languages out there is Python, and while it is certainly possible to run Python programs in WebAssembly, the performance is not ideal, to say the least (see the benchmark below).

Today we are incredibly happy to announce py2wasm: a Python to WebAssembly compiler that transforms your Python programs to WebAssembly (thanks to Nuitka!), avoiding the interpreter overhead and allowing them to run about 3 times faster than with the baseline interpreter!

Here is how you can use it:

$ pip install py2wasm

$ py2wasm myprogram.py -o myprogram.wasm

$ wasmer run myprogram.wasm

Note: py2wasm needs to run in a Python 3.11 environment. You can use pyenv to set up Python 3.11 easily on your system:

$ pyenv install 3.11 && pyenv global 3.11
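The commands above take a myprogram.py as input. As a minimal sketch, such a file could look like the following. Its contents are hypothetical (the article does not show this file), and whether a given program compiles depends on what py2wasm currently supports:

```python
# myprogram.py (hypothetical contents, for illustration only)

def fib(n):
    """Return the n-th Fibonacci number, computed iteratively."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def main():
    # Print a few values so the program has visible output.
    for n in (10, 20, 30):
        print(f"fib({n}) = {fib(n)}")


if __name__ == "__main__":
    main()
```

Running it with a regular Python interpreter first is a quick way to confirm the script itself works before compiling it.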

Benchmarking

Let's try to get the famous pystone.py benchmark running to compare native Python, regular WebAssembly and py2wasm.

Note: you can check the code used to benchmark in https://gist.github.com/syrusakbary/b318c97aaa8de6e8040fdd5d3995cb7c

When executing Python natively (387k pystones/second):

$ python pystone.py

Pystone(1.1) time for 50000 passes = 0.129016

This machine benchmarks at 387549 pystones/second

When executing the CPython interpreter inside of WebAssembly (89k pystones/second):

$ wasmer run python/python --mapdir=/app:. /app/pystone.py

Pystone(1.1) time for 50000 passes = 0.557239

This machine benchmarks at 89728.1 pystones/second

When using py2wasm via Nuitka (235k pystones/second):

$ py2wasm pystone.py -o pystone.wasm

$ wasmer run pystone.wasm

Pystone(1.1) time for 50000 passes = 0.21263

This machine benchmarks at 235150 pystones/second

In a nutshell: in this run py2wasm reaches about 60% of native Python speed, and is about 2.5-3x faster than the baseline interpreter running in WebAssembly!
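The ratios can be recomputed directly from the three pystone figures reported above. This is only arithmetic on those numbers, with variable names chosen for illustration:

```python
# Figures copied from the pystone runs above (pystones/second).
native = 387549        # python pystone.py
interpreter = 89728.1  # CPython interpreter running inside WebAssembly
py2wasm = 235150       # pystone.wasm produced by py2wasm


def ratio(a, b):
    """Return a / b rounded to two decimals."""
    return round(a / b, 2)


speedup_vs_interpreter = ratio(py2wasm, interpreter)
fraction_of_native = ratio(py2wasm, native)

print(f"py2wasm vs. Wasm interpreter: {speedup_vs_interpreter}x")  # 2.62x
print(f"py2wasm vs. native CPython:   {fraction_of_native:.0%}")    # 61%
```

These are single-run figures from one machine, so they are best read as rough indicators rather than precise measurements.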

So, how does this black magic work?

Let's first analyze all the possible strategies that we can think of to optimize Python workloads in WebAssembly.

How to speed up Python in WebAssembly

There are many ways to optimize runtime speed:

- Use a Python subset that can be compiled into performant code

- Use JIT inside of Python

- Use Static Analysis to optimize the generated code

It's time to analyze each!

Compile a Python subset to Wasm

Instead of supporting the full Python feature set, we can target only a subset of it. Because not every feature needs to be supported, the compiler can take shortcuts and optimize the generated code much further:

- ✅ Can generate incredibly performant code

- ❌ Doesn’t support the full syntax or modules

The most popular choices using this strategy are: Cython, RPython (PyPy) and Codon.

Cython

Cython has been around for many years, and is probably the oldest method to accelerate Python modules. Cython is not strictly a subset, since it supports a syntax closer to C. The main goal of Cython is to create performant modules that run next to your Python codebase. However, we want to allow creating completely standalone WebAssembly binaries from our programs.

So unfortunately Cython will not work for speeding up Python executables in Wasm.

RPython

RPython transforms the typed code into C, and then compiles it with a normal C compiler.

PyPy itself is compiled with RPython, which is able to do all the black magic under the hood.

def entry_point(argv):
    print "Hello, World!"
    return 0

def target(*args):
    return entry_point

RPython first translates hello_world.py into C (hello-world-c.c) and then compiles that into a native binary (hello-world):

$ rpython hello_world.py

$ ./hello-world

Hello, World!

However, RPython imposes many restrictions on the Python code it accepts. For example, dictionaries need to be fully typed, and this severely limits the programs that we can use it for.
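To make the dictionary restriction concrete, here is a small sketch in regular Python. The helper `is_uniformly_typed` is hypothetical and checks at runtime, whereas RPython infers types when it translates the program, so this only illustrates the kind of shape a typed subset expects:

```python
def is_uniformly_typed(mapping):
    """Return True when all keys share one type and all values share one type."""
    key_types = {type(k) for k in mapping}
    value_types = {type(v) for v in mapping.values()}
    return len(key_types) <= 1 and len(value_types) <= 1


# Common in everyday Python: one dict holding str, int and bool values.
settings = {"name": "demo", "retries": 3, "verbose": True}

# One key type and one value type: the shape a typed subset can work with.
counters = {"hits": 10, "misses": 2}

print(is_uniformly_typed(settings))  # False
print(is_uniformly_typed(counters))  # True
```

A lot of existing Python code looks like `settings`, which is why this kind of restriction rules out many programs.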

Codon

Codon transforms a subset of Python code into LLVM IR.

While Codon is one of the most promising alternatives and the one that offers the most speedup (from 10 to 100x faster), the subset of Python it supports still has many missing features, which prevents using it for most Python code.

Python JITs

Another strategy is to use a JIT inside of Python, so the hot paths of execution are compiled to WebAssembly.

- ✅ Really fast speeds

- ❌ Needs to warm up

- ❌ Not trivial to support with WebAssembly (but possible)

One of the most popular ways (if not the most popular) is PyPy.

PyPy

PyPy is a Python interpreter that can execute your Python programs faster than the standard CPython interpreter. It speeds up execution with a Just-In-Time (JIT) compiler that kicks in when doing complex computation.

Running a JIT in WebAssembly is not trivial, but it is possible.
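The warm-up cost mentioned above can be illustrated with a toy model. This is not how PyPy's JIT is implemented; `WarmingFunction` and `HOT_THRESHOLD` are hypothetical names, and the "compiled" path is just a hand-written faster function standing in for JIT output:

```python
HOT_THRESHOLD = 3  # hypothetical: calls served by the slow path before switching


class WarmingFunction:
    """Serve the first calls with a generic path, later calls with a fast one."""

    def __init__(self, generic, specialized):
        self.generic = generic
        self.specialized = specialized
        self.path_log = []  # records which path handled each call

    def __call__(self, *args):
        if len(self.path_log) < HOT_THRESHOLD:
            self.path_log.append("generic")      # stands in for the interpreter
            return self.generic(*args)
        self.path_log.append("specialized")      # stands in for JIT-compiled code
        return self.specialized(*args)


def sum_squares_generic(n):
    """Sum i*i for i in range(n) with a plain loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def sum_squares_fast(n):
    """Same result via the closed-form formula."""
    return (n - 1) * n * (2 * n - 1) // 6


sum_squares = WarmingFunction(sum_squares_generic, sum_squares_fast)
results = [sum_squares(10) for _ in range(5)]
print(results)               # [285, 285, 285, 285, 285]
print(sum_squares.path_log)  # three "generic" entries, then two "specialized"
```

The results never change, only the path that produced them, which is why short-lived programs may finish before a JIT pays off.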

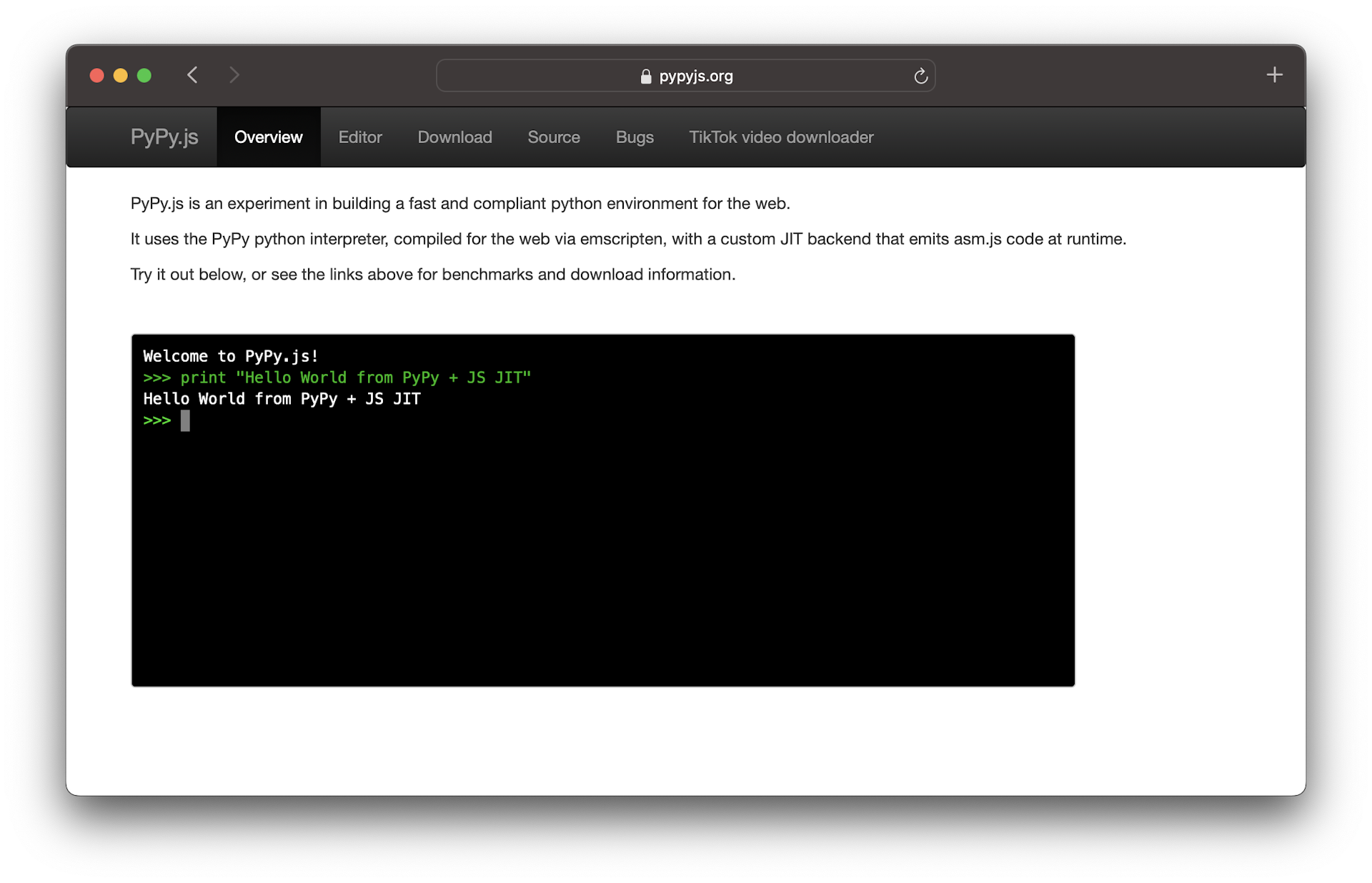

About five years ago, the project pypyjs.org showcased this possibility by creating a new backend for PyPy that targeted JavaScript/asm.js (instead of x86_64 or arm64/aarch64).

You can check the PyPy Asm.js backend implementation here: https://github.com/pypyjs/pypy/tree/pypyjs/rpython/jit/backend/asmjs

For our case, we would need to adapt this backend to output WebAssembly instead of JavaScript code.

It should be totally possible to implement a Wasm backend in PyPy, as PyPy.js demonstrated, but unfortunately it is not trivial to do so (it may take from a few weeks to a month of work).

Static Analysis

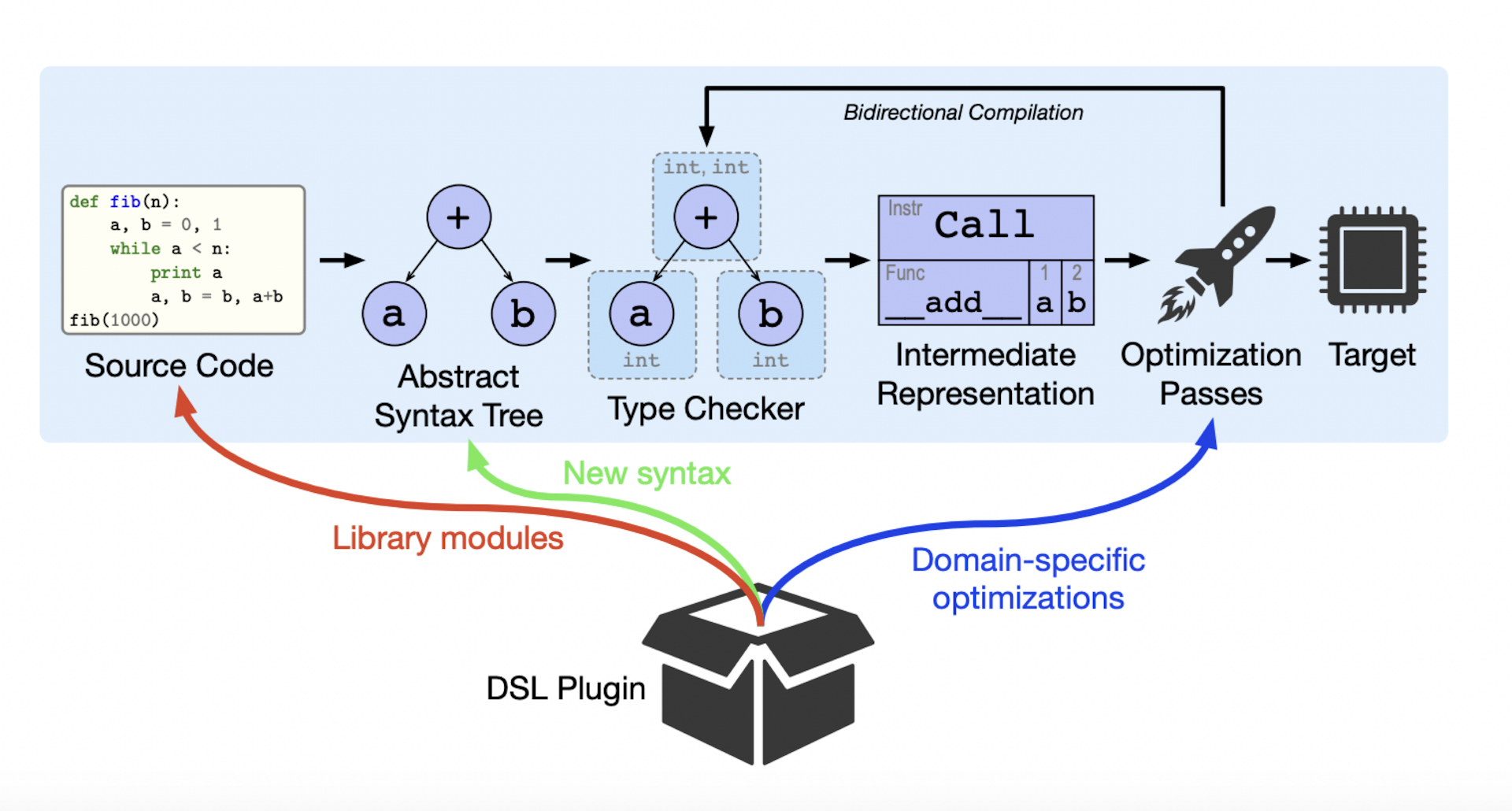

There’s one last strategy that we can try to speed up Python execution inside of WebAssembly: static analysis. Thanks to static analysis, we can detect the types used in our program ahead of time, so the code can be transpiled into more performant specializations (usually through Python to C transpilation).

- ✅ Mostly compatible with any Python code and applications

- ❌ Only 1.5-3x faster

- ❌ Complex to get right (from the static analyzer perspective, many quirks)

- ❌ Larger binaries
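As a very small illustration of detecting types ahead of time, the sketch below uses Python's standard `ast` module to record the type of every variable that is assigned a literal. Real analyzers go much further (function calls, control flow, containers); `infer_constant_types` is a hypothetical helper, not part of any tool mentioned here:

```python
import ast


def infer_constant_types(source):
    """Map each variable assigned a literal to the name of that literal's type."""
    inferred = {}
    for node in ast.walk(ast.parse(source)):
        # Only handle the easy case: `name = <literal>`.
        if isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant):
            for target in node.targets:
                if isinstance(target, ast.Name):
                    inferred[target.id] = type(node.value.value).__name__
    return inferred


program = "count = 0\nratio = 1.5\nlabel = 'pystone'\ndata = load()\n"
types = infer_constant_types(program)
print(types)  # {'count': 'int', 'ratio': 'float', 'label': 'str'}
```

Note that `data = load()` is left out: without analyzing `load`, its type is unknown, which hints at why getting static analysis right for real programs is complex.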

mypy & mypy-c

Mypy is probably the most popular static analyzer for Python.

The Mypy team also created mypy-c (released under the name mypyc), which takes the typing information from Mypy and transforms the Python code into equivalent C code that runs faster.

mypy-c currently specializes in producing Python modules that run inside native Python. In our case, we want to create standalone WebAssembly binaries from our programs, so unfortunately mypy-c does not seem to fit our use case.

Nuitka

Nuitka works by transpiling the Python calls that the program makes into C, using the inner CPython API calls. It supports most Python programs, as it transpiles Python code into the corresponding CPython calls.
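To see what overhead is being avoided, it helps to look at what the interpreter does for a single addition. The sketch below uses the standard `dis` module to list the bytecode for `a + b`. The exact C code Nuitka generates is not shown in this article, so the comment about the compiled equivalent is an assumption for illustration only:

```python
import dis


def add(a, b):
    return a + b


# The interpreter decodes and dispatches each of these instructions at runtime.
opnames = [ins.opname for ins in dis.get_instructions(add)]
binary_ops = [name for name in opnames if name.startswith("BINARY_")]

print(opnames)     # instruction names vary between Python versions
print(binary_ops)  # the single instruction that performs the addition

# Assumption: a compiled build replaces this dispatch with a direct C call into
# the CPython runtime, conceptually similar to the C-API function PyNumber_Add.
```

The object semantics stay the same in both cases, which fits the observation that this approach is compatible with most code but yields moderate speedups.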

It can even work as a code obfuscator, since the compiled output is much harder to turn back into the original Python source.

After a deep analysis of all the options we realized that probably the fastest option to get Python running performantly in WebAssembly was using Nuitka.

Using Nuitka to compile Python to WebAssembly

Nuitka seemed like the easiest option to speed up Python in WebAssembly contexts, mainly because most of the hard work of transpiling Python code into the underlying CPython interpreter calls was already done, so we could probably get it compiling to WebAssembly with some tweaks.

Nuitka doesn't work (yet) with Python 3.12, so we had to compile Python 3.11 to WASI and use the generated libpython.a archive, so that Nuitka could use this library when targeting WebAssembly and WASI to create the executable.

And things started working... kind of. Once we tried to run the generated Wasm file we hit another issue: the Nuitka transpiler executes on a 64-bit architecture, but the generated code runs on a 32-bit architecture (WebAssembly). Nuitka uses a serialization/deserialization layer to cache the values of certain constants (and accelerate startup), and while the constants were being serialized in 64 bits, they were deserialized in 32 bits, so the two sides did not match.
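The effect of such a width mismatch can be reproduced with Python's standard `struct` module. This is not Nuitka's actual constants format, just a sketch of what happens when a writer emits 64-bit integers and a reader consumes the same bytes as 32-bit integers:

```python
import struct

values = (1, 2)

# Writer side: each value stored as a little-endian signed 64-bit integer.
blob = struct.pack("<2q", *values)

# Mismatched reader: interprets the same 16 bytes as four 32-bit integers.
as_32bit = struct.unpack("<4i", blob)

# Matching reader: uses the same width as the writer.
as_64bit = struct.unpack("<2q", blob)

print(len(blob))  # 16
print(as_32bit)   # (1, 0, 2, 0)
print(as_64bit)   # (1, 2)
```

With a mismatched reader every value after the first is read from the wrong offset, so the fix is to make both sides agree on one width regardless of the host architecture.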

Once we fixed these two issues, the prototype was fully working! Hurray! 🎉

We have created a PR to upstream the changes into Nuitka. Feel free to take a look here: https://github.com/Nuitka/Nuitka/pull/2814

ℹ️ Right now py2wasm is using a fork of Nuitka, but once changes are integrated upstream we aim to make py2wasm a thin layer on top of Nuitka.

We worked on py2wasm to fulfill our own needs first: we want to accelerate Python execution as much as possible, so we can move our Python Django backend from Google Cloud to Wasmer Edge.

py2wasm brings us (and hopefully many others) one step closer to running Python backend apps on Edge with incredible performance, providing a much cheaper alternative for hosting these apps than the current cloud providers.

Future Roadmap

In the future, we would like to publish py2wasm as a Wasmer package, so you can simply run the following command to use it. Stay tuned!

wasmer run py2wasm --dir=. -- myfile.py -o myfile.wasm

We hope you enjoyed the article showcasing py2wasm and we can’t wait to hear your feedback on Hacker News and GitHub!

This article is based on the work I presented at the Wasm I/O conference on March 15th, 2024. You can view the slides on SpeakerDeck, or watch the presentation on YouTube: https://www.youtube.com/watch?v=_Gq273qvNMg

About the Author

Syrus Akbary is an entrepreneur and programmer, known in particular for his contributions to the field of WebAssembly. He is the Founder and CEO of Wasmer, an innovative company that focuses on creating developer tools and infrastructure for running Wasm.
